AI in Video

Luma, Runway and Sora

TLDR:

AI in video is already very impressive, but it hasn’t yet reached the same level of simplicity and power that generative AI has with text and music.

Luma and Runway generate 4-5 second clips that are very accurate and beautiful representations of their prompts, although they feel too short.

Sora is OpenAI’s upcoming AI video solution and it looks very impressive, but until it’s released it isn’t clear whether it will be a significant jump from what’s available.

Last week my post was on AI in Music and, as I said in the preamble to that post, my content is predominantly focused on Web3 and Crypto. However, sometimes I also write about AI, as I feel Web3 and AI are innately linked.

So this week I wanted to continue with the AI theme and move from music to video, taking a look at the current state of the art of consumer software for AI in Video.

AI in Video

Last week I wrote about how the latest AI in Music tools have blown my mind and given me the same “wow AI can already do this?” moment as I got in late 2022 when I first tried ChatGPT.

Now while AI has already become indistinguishable from human output with text and music, and in many ways even better than humans, I haven’t yet had the same feeling about AI in video.

AI in Video is here and it’s good but it’s not yet mind-blowing.

The key word here is “yet” though because from what you’ll see below I’m sure it will get there pretty soon.

There have already been some incredible developments, and videos of what’s coming soon seem to be very impressive. Below I’ll run through what’s already here with the likes of Luma and Runway, plus what’s to come with Sora.

Luma

Luma is the newest AI video generator on the block and it’s pretty impressive, although as I say it’s not yet mind-blowing.

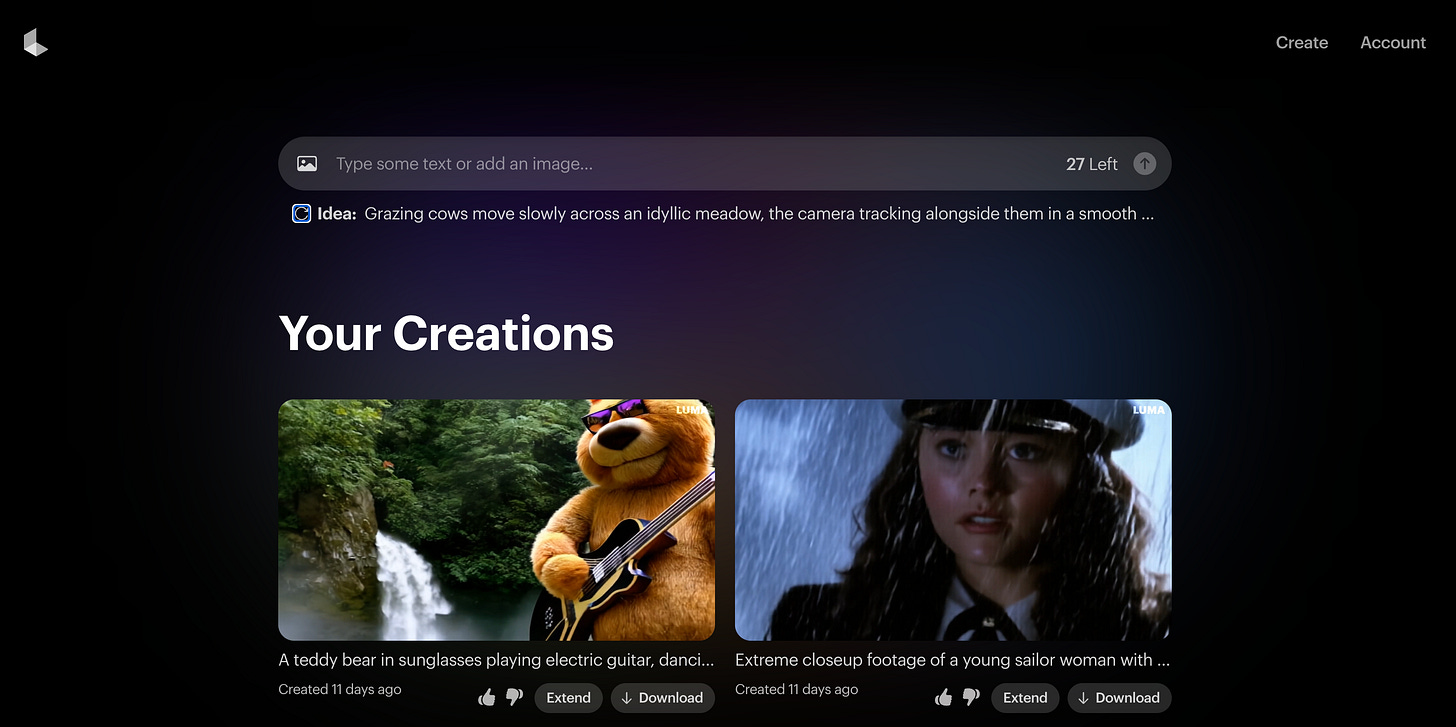

On opening Luma you get a bunch of pictures showing the sort of video output they can generate. You’ll need to select “Try Now” and log in with Google.

On the free tier you’re currently only allowed 5 generations per day due to high usage, but that’s more than enough to get a feeling for how it works. The paid tier has no limit per day if you’re interested in paying.

Just as with other AI solutions you give a text prompt and get the output. They even have an “idea” field below that can generate a possible prompt for you to try out.

I used one of Luma’s self-generated prompts, “Grazing cows move slowly across an idyllic meadow, the camera tracking alongside them in a smooth side-angle motion”, and got the following result.

While the video is beautiful and very accurately follows the prompt, I think the big challenge here is that it’s still only 5 seconds long. Luma has an “extend” button that allows you to add another 5 seconds with a new prompt, but you have to do that many times to get anything remotely interesting.

Generations can also be slow, especially when there are a lot of concurrent users. I’ve seen them take anywhere from 3 to 10 minutes, which adds to the feeling of it being good but not yet mind-blowing.

I’d give one additional tip: Luma allows you to start with a source image, and in my experience this seems to give the best results.

Runway

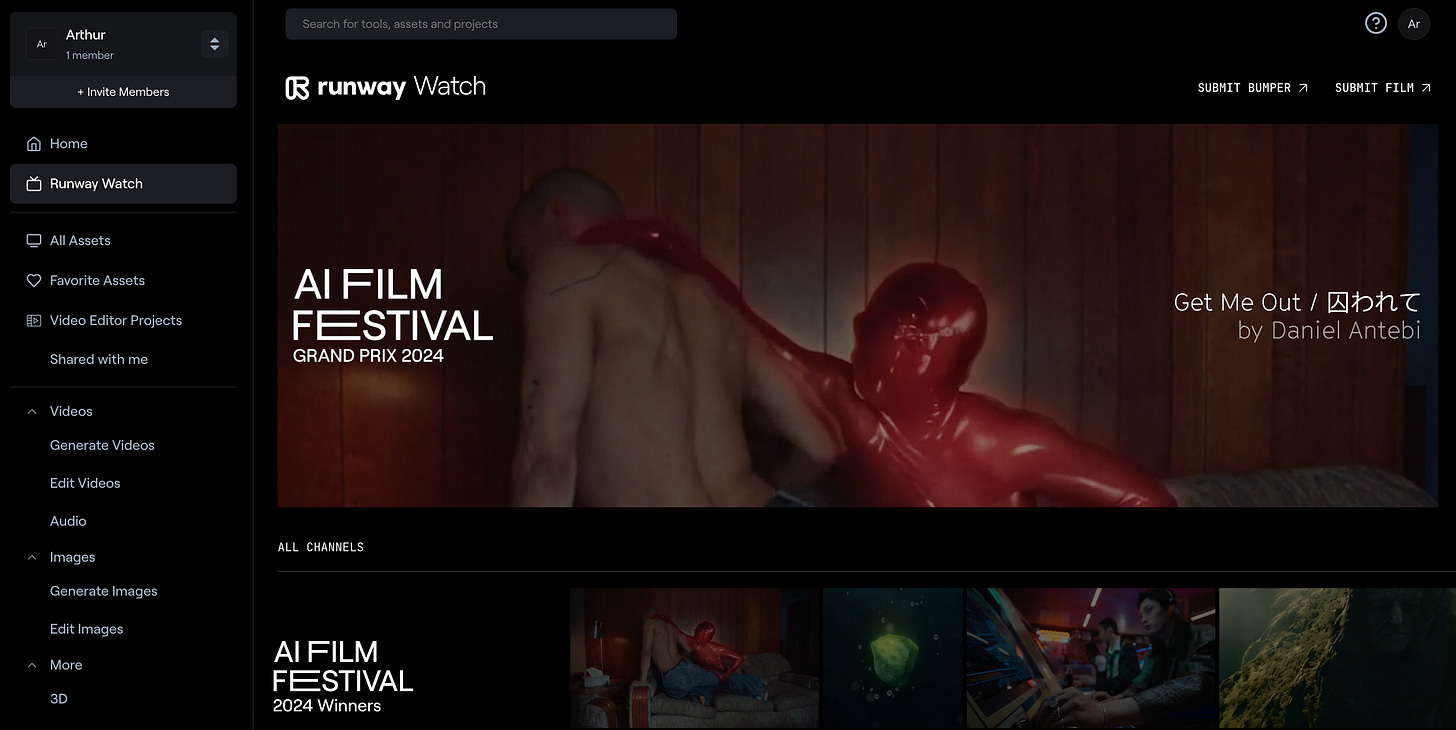

Runway is a competitor to Luma that’s a bit more complex and robust. Once you sign up and log in you’ll be taken to a home screen where you can generate your own videos, images or 3D content. Our focus is on video right now though, so click on “Generate videos” and “Try Gen-2”.

Here you can already tell Runway is a lot more robust and customisable in its tooling than Luma. On the left-hand side there are several options to play with the camera, motion, general settings and more. However, at its core the AI engine generates the same short clips as Luma, with Runway’s clips being only 4 seconds long.

Runway, like Luma, can create an idea for a prompt if you are lacking in ideas. I let it create a prompt and got: “A lone astronaut walking on an alien planet, vast barren landscape, 35mm lens, cinematic sci-fi scene, stark lighting, sense of isolation, a nighttime shot of a neon-lit street in Tokyo”, then selected “Generate 4s”.

Generation is generally a little faster in Runway than in Luma, taking only a couple of minutes each time I’ve tried. Just as with Luma, you’ll only get a certain total number of generations in the free version, but that’s enough to get a good feel for it.

The output of Runway’s generation is also impressive, as with Luma, although this example was a little odd, with the astronaut’s face seemingly backwards. Plus, with the clip being only 4 seconds long, you get the same distinct feeling that it’s not quite long enough.

You can extend a video by an additional 4 seconds per generation and play with the settings, but for the average person it’s hard to use effectively, and my attempts have always just resulted in longer versions of the exact same scene.

However, Runway does a good job of showing that while the average person may still not generate anything particularly impressive, others have already begun to really master these AI tools. Runway offers a really impressive catalogue of AI-generated videos under their “Runway Watch” tab.

I definitely recommend having a browse in this section to see what some people are already able to do with AI in video. While it hasn’t yet reached the point of being as powerfully simple as AI music and text, it’s certainly a peek into what’s already possible.

Sora and the future

While the results of these AI video engines are impressive, the results for the ordinary user still feel like tiny shorts that won’t have much use in most of our day-to-day lives, nor will they replace film directors and videographers - yet!

You need to really be a pro to make good use of the current suite of tools, and then you can get the sort of results shown in the “Runway Watch” section.

The state of the art is evolving fast though, and OpenAI has teased their AI Video solution called “Sora” that seems to be generating results that are even more impressive than the current tools as you can see below:

It’s hard to know if Sora will still require the same fine level of control, or if it will be able to give great results for the common user.

Either way, as soon as one of these tools achieves a UX that’s as easy as the current tools are for music and text, it’ll undoubtedly create another “wow AI can already do this?” moment.

As I said in my previous post, AI is equal parts scary and fascinating. It’s hard to imagine what the world will look like 2 years from now, let alone 20. But I’m optimistic that, with the insane productivity gains we’ll get from AI, the future we’re heading to will be exponentially better for humanity.